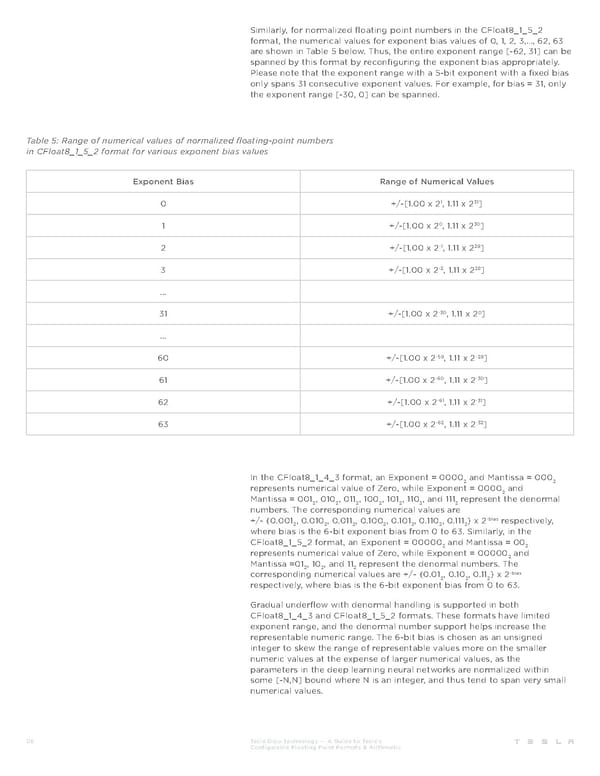

Similarly, for normalized floating-point numbers in the CFloat8_1_5_2 format, the numerical values for exponent bias values of 0, 1, 2, 3, …, 62, 63 are shown in Table 5 below. Thus, the entire exponent range [-62, 31] can be spanned by this format by reconfiguring the exponent bias appropriately. Note that with a fixed bias, a 5-bit exponent spans only 31 consecutive exponent values, because the all-zeros exponent field is reserved for zero and denormals. For example, for bias = 31, only the exponent range [-30, 0] can be spanned.

Table 5: Range of numerical values of normalized floating-point numbers in CFloat8_1_5_2 format for various exponent bias values

| Exponent Bias | Range of Numerical Values |
|---|---|
| 0 | $\pm[1.00_2 \times 2^{1},\ 1.11_2 \times 2^{31}]$ |
| 1 | $\pm[1.00_2 \times 2^{0},\ 1.11_2 \times 2^{30}]$ |
| 2 | $\pm[1.00_2 \times 2^{-1},\ 1.11_2 \times 2^{29}]$ |
| 3 | $\pm[1.00_2 \times 2^{-2},\ 1.11_2 \times 2^{28}]$ |
| … | … |
| 31 | $\pm[1.00_2 \times 2^{-30},\ 1.11_2 \times 2^{0}]$ |
| … | … |
| 60 | $\pm[1.00_2 \times 2^{-59},\ 1.11_2 \times 2^{-29}]$ |
| 61 | $\pm[1.00_2 \times 2^{-60},\ 1.11_2 \times 2^{-30}]$ |
| 62 | $\pm[1.00_2 \times 2^{-61},\ 1.11_2 \times 2^{-31}]$ |
| 63 | $\pm[1.00_2 \times 2^{-62},\ 1.11_2 \times 2^{-32}]$ |

In the CFloat8_1_4_3 format, Exponent = $0000_2$ with Mantissa = $000_2$ represents the numerical value zero, while Exponent = $0000_2$ with Mantissa = $001_2$, $010_2$, $011_2$, $100_2$, $101_2$, $110_2$, and $111_2$ represents the denormal numbers. The corresponding numerical values are $\pm\{0.001_2, 0.010_2, 0.011_2, 0.100_2, 0.101_2, 0.110_2, 0.111_2\} \times 2^{-\text{bias}}$ respectively, where bias is the 6-bit exponent bias from 0 to 63.

Similarly, in the CFloat8_1_5_2 format, Exponent = $00000_2$ with Mantissa = $00_2$ represents the numerical value zero, while Exponent = $00000_2$ with Mantissa = $01_2$, $10_2$, and $11_2$ represents the denormal numbers. The corresponding numerical values are $\pm\{0.01_2, 0.10_2, 0.11_2\} \times 2^{-\text{bias}}$ respectively, where bias is the 6-bit exponent bias from 0 to 63.

Gradual underflow with denormal handling is supported in both the CFloat8_1_4_3 and CFloat8_1_5_2 formats. Because these formats have a limited exponent range, denormal support extends the representable numeric range toward smaller magnitudes.
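The encoding rules above can be sketched as a small exact decoder. This is a minimal Python sketch, not Tesla's implementation. It assumes the bit layout sign | exponent | mantissa from most to least significant bit (the text gives the field widths but not their order), and it follows the conventions stated or implied in this section: normal values are $\pm 1.\text{mantissa} \times 2^{\text{exponent} - \text{bias}}$ (inferred from Table 5, where the all-ones exponent field is an ordinary normal value), and an all-zeros exponent gives $\pm 0.\text{mantissa} \times 2^{-\text{bias}}$ as written above. The helper names `decode_cfloat8` and `normal_range` are hypothetical.

```python
from fractions import Fraction


def decode_cfloat8(byte: int, exp_bits: int, bias: int) -> Fraction:
    """Decode one 8-bit CFloat8_1_<exp_bits>_<7 - exp_bits> pattern exactly.

    Assumed bit layout (MSB to LSB): 1 sign bit, exp_bits exponent bits,
    then the remaining mantissa bits.
    """
    if exp_bits not in (4, 5):
        raise ValueError("only CFloat8_1_4_3 and CFloat8_1_5_2 are handled")
    if not 0 <= byte <= 0xFF:
        raise ValueError("byte must fit in 8 bits")
    if not 0 <= bias <= 63:
        raise ValueError("bias is a 6-bit unsigned value (0..63)")
    man_bits = 7 - exp_bits
    sign = -1 if byte >> 7 else 1
    exponent = (byte >> man_bits) & ((1 << exp_bits) - 1)
    mantissa = Fraction(byte & ((1 << man_bits) - 1), 1 << man_bits)
    if exponent == 0:
        # Zero (mantissa 0) or denormal: +/-0.mantissa x 2^-bias, as the text states.
        # Fraction has no signed zero, so the negative-zero pattern decodes to 0.
        return sign * mantissa * Fraction(2) ** -bias
    # Normal: +/-1.mantissa x 2^(exponent - bias); the all-ones exponent is an
    # ordinary value here, matching the upper ends listed in Table 5.
    return sign * (1 + mantissa) * Fraction(2) ** (exponent - bias)


def normal_range(exp_bits: int, bias: int) -> tuple[Fraction, Fraction]:
    """Smallest and largest positive normal values for a given bias."""
    man_bits = 7 - exp_bits
    smallest = decode_cfloat8(1 << man_bits, exp_bits, bias)  # exponent 0...01, mantissa 0
    largest = decode_cfloat8(0x7F, exp_bits, bias)            # exponent 1...1, mantissa 1...1
    return smallest, largest


if __name__ == "__main__":
    # Reproduce the listed rows of Table 5 (CFloat8_1_5_2).
    for bias in (0, 1, 2, 3, 31, 60, 61, 62, 63):
        lo, hi = normal_range(5, bias)
        print(f"bias={bias:2d}  [{float(lo):.3e}, {float(hi):.3e}]")
```

Exact `Fraction` arithmetic is used so each decoded value can be compared against the table without floating-point rounding. For example, `normal_range(5, 31)` yields $2^{-30}$ and $1.11_2 \times 2^{0} = 7/4$, matching the [-30, 0] exponent span for bias = 31, and sweeping the bias from 0 to 63 covers the full [-62, 31] exponent range.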
The 6-bit bias is chosen as an unsigned integer to skew the range of representable values toward smaller magnitudes at the expense of larger ones. This suits deep learning, where neural network parameters are normalized within some [-N, N] bound (N an integer) and therefore tend to span very small numerical values.

Tesla Dojo Technology — A Guide to Tesla’s Configurable Floating Point Formats & Arithmetic
