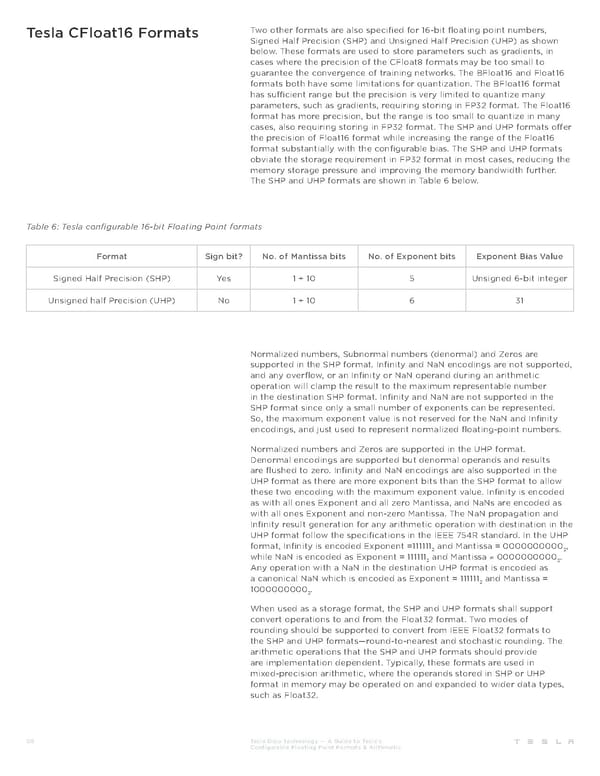

Tesla CFloat16 Formats

Two other formats are also specified for 16-bit floating-point numbers: Signed Half Precision (SHP) and Unsigned Half Precision (UHP), shown in Table 6 below. These formats store parameters such as gradients in cases where the precision of the CFloat8 formats may be too low to guarantee the convergence of training networks.

The BFloat16 and Float16 formats both have limitations for quantization. BFloat16 has sufficient range, but its precision is too limited to quantize many parameters, such as gradients, which must then be stored in FP32 format. Float16 has more precision, but its range is too small in many cases, which also forces storage in FP32 format. The SHP and UHP formats offer the precision of Float16 while substantially extending its range through a configurable bias. In most cases they remove the need to store parameters in FP32 format, reducing memory storage pressure and further improving memory bandwidth.

Table 6: Tesla configurable 16-bit Floating Point formats

| Format | Sign bit? | No. of Mantissa bits | No. of Exponent bits | Exponent Bias Value |
|---|---|---|---|---|
| Signed Half Precision (SHP) | Yes | 1 + 10 | 5 | Unsigned 6-bit integer |
| Unsigned Half Precision (UHP) | No | 1 + 10 | 6 | 31 |

A mantissa width of 1 + 10 denotes one implicit leading bit plus 10 stored bits, so both formats occupy 16 bits.

Normalized numbers, subnormal (denormal) numbers, and zeros are supported in the SHP format. Infinity and NaN encodings are not supported: any overflow, or an Infinity or NaN operand during an arithmetic operation, clamps the result to the maximum representable number in the destination SHP format. Infinity and NaN are omitted because the SHP format can represent only a small number of exponents, so the maximum exponent value is not reserved for NaN and Infinity encodings and is used instead to represent normalized floating-point numbers.

Normalized numbers and zeros are supported in the UHP format.
Denormal encodings are supported, but denormal operands and results are flushed to zero. Infinity and NaN encodings are also supported in the UHP format, because it has one more exponent bit than the SHP format, which leaves room to reserve the maximum exponent value for these two encodings. Infinity is encoded with an all-ones exponent and an all-zero mantissa, and NaNs are encoded with an all-ones exponent and a nonzero mantissa. NaN propagation and Infinity result generation for any arithmetic operation with a destination in the UHP format follow the specifications in the IEEE 754R standard.

In the UHP format, Infinity is encoded as Exponent = $111111_2$ and Mantissa = $0000000000_2$, while NaN is encoded as Exponent = $111111_2$ and Mantissa $\neq 0000000000_2$. Any operation that produces a NaN in the destination UHP format returns a canonical NaN, encoded as Exponent = $111111_2$ and Mantissa = $1000000000_2$.

When used as a storage format, the SHP and UHP formats shall support convert operations to and from the Float32 format. Two rounding modes should be supported when converting from the IEEE Float32 format to the SHP and UHP formats: round-to-nearest and stochastic rounding. The arithmetic operations that the SHP and UHP formats should provide are implementation dependent. Typically, these formats are used in mixed-precision arithmetic, where operands stored in SHP or UHP format in memory may be expanded to wider data types, such as Float32, and operated on.

Tesla Dojo Technology — A Guide to Tesla's Configurable Floating Point Formats & Arithmetic
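The SHP rules from Table 6 and the clamping behavior described above can be sketched in Python. This is a minimal illustration under stated assumptions, not Tesla's implementation: it assumes the bits are laid out as sign, then 5 exponent bits, then 10 stored mantissa bits; that the bias is subtracted in the usual way, so every nonzero exponent field $e$ (including 31) encodes $(-1)^s \cdot 2^{e-\text{bias}} \cdot 1.m$; and that exponent field 0 holds IEEE-style subnormals. The names `shp_encode`, `shp_decode`, and `shp_max` are hypothetical, only round-to-nearest-even is shown, and because the text does not say which sign a clamped NaN receives, the sketch clamps NaN to the positive maximum.

```python
import math

MAN_BITS = 10                              # stored mantissa bits (plus 1 implicit bit)
EXP_BITS = 5                               # SHP exponent field width
MAX_EXP_FIELD = (1 << EXP_BITS) - 1        # 31: a normal exponent, not reserved in SHP
MAX_CODE = (MAX_EXP_FIELD << MAN_BITS) | ((1 << MAN_BITS) - 1)  # 0x7FFF, largest magnitude


def shp_max(bias: int) -> float:
    """Largest finite SHP magnitude for a given bias."""
    return math.ldexp((2 << MAN_BITS) - 1, MAX_EXP_FIELD - bias - MAN_BITS)


def shp_decode(bits: int, bias: int) -> float:
    """Decode a 16-bit SHP pattern laid out as sign | 5-bit exponent | 10-bit mantissa."""
    sign = -1.0 if (bits >> 15) & 1 else 1.0
    exp = (bits >> MAN_BITS) & MAX_EXP_FIELD
    man = bits & ((1 << MAN_BITS) - 1)
    if exp == 0:
        # Zero or subnormal: no implicit leading 1 (assumed IEEE-style scaling).
        return sign * math.ldexp(man, 1 - bias - MAN_BITS)
    # Every nonzero exponent, including 31, encodes a normalized number.
    return sign * math.ldexp((1 << MAN_BITS) | man, exp - bias - MAN_BITS)


def shp_encode(x: float, bias: int) -> int:
    """Round x to the nearest SHP value (ties to even), clamping instead of overflowing."""
    if not 0 <= bias < 64:
        raise ValueError("bias must be an unsigned 6-bit integer")
    if math.isnan(x):
        return MAX_CODE                    # assumption: NaN clamps to +max
    sign = 0x8000 if math.copysign(1.0, x) < 0 else 0
    mag = abs(x)
    if math.isinf(mag):
        return sign | MAX_CODE             # Infinity clamps to the largest magnitude
    if mag == 0.0:
        return sign                        # signed zero
    e = max(math.frexp(mag)[1] - 1, 1 - bias)  # unbiased exponent, floored at min normal
    n = round(math.ldexp(mag, MAN_BITS - e))   # significand in ulps; round() ties to even
    if n == (2 << MAN_BITS):               # rounding carried into the next binade
        n, e = 1 << MAN_BITS, e + 1
    if n < (1 << MAN_BITS):                # subnormal result (exponent field 0)
        return sign | n
    exp_field = e + bias
    if exp_field > MAX_EXP_FIELD:          # overflow clamps to the largest magnitude
        return sign | MAX_CODE
    return sign | (exp_field << MAN_BITS) | (n - (1 << MAN_BITS))
```

With `bias = 15`, the finite encodings in this sketch coincide with IEEE Float16 (for example, `shp_encode(1.0, 15)` gives `0x3C00`), except that exponent field 31 still holds normalized numbers, raising the maximum from 65504 to 131008. Changing the bias shifts the whole representable range by powers of two, which is how the configurable bias extends the range of a Float16-sized encoding.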

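The UHP special values and the two Float32 conversion modes described above can be sketched in Python as well. This is a hypothetical illustration, not Tesla's implementation, and `uhp_decode` and `uhp_from_float32` are made-up names. It assumes the 16 bits are laid out as a 6-bit exponent followed by a 10-bit mantissa, that rounding happens before a denormal result is flushed to zero, and that overflow under either rounding mode produces Infinity. The text does not say how negative Float32 values convert to an unsigned format, so the sketch simply rejects them. Every Float32 value is exactly representable as a Python float, so the converter takes a plain `float`.

```python
import math
import random
from typing import Optional

MAN_BITS = 10
EXP_BITS = 6
BIAS = 31                                   # fixed UHP bias from Table 6
EXP_ALL_ONES = (1 << EXP_BITS) - 1          # 63, reserved for Infinity and NaN
UHP_INF = EXP_ALL_ONES << MAN_BITS          # exponent 111111, mantissa 0000000000 (0xFC00)
UHP_CANONICAL_NAN = UHP_INF | (1 << (MAN_BITS - 1))  # mantissa 1000000000 (0xFE00)


def uhp_decode(bits: int) -> float:
    """Decode a 16-bit UHP pattern laid out as 6-bit exponent | 10-bit mantissa."""
    exp = (bits >> MAN_BITS) & EXP_ALL_ONES
    man = bits & ((1 << MAN_BITS) - 1)
    if exp == EXP_ALL_ONES:
        return math.inf if man == 0 else math.nan
    if exp == 0:
        return 0.0                          # zero; denormal operands are flushed to zero
    return math.ldexp((1 << MAN_BITS) | man, exp - BIAS - MAN_BITS)


def uhp_from_float32(x: float, mode: str = "nearest",
                     rng: Optional[random.Random] = None) -> int:
    """Convert a Float32 value (held exactly in a Python float) to UHP bits.

    mode is "nearest" (ties to even) or "stochastic".
    """
    if math.isnan(x):
        return UHP_CANONICAL_NAN            # NaN results use the canonical NaN
    if x == 0.0:
        return 0                            # +0.0 and -0.0 map to the only UHP zero
    if x < 0:
        # Hypothetical policy: the text does not say how negatives convert.
        raise ValueError("UHP has no sign bit")
    if math.isinf(x):
        return UHP_INF
    e = max(math.frexp(x)[1] - 1, 1 - BIAS)     # unbiased exponent, floored at min normal
    scaled = math.ldexp(x, MAN_BITS - e)         # significand in units of the target ulp
    if mode == "nearest":
        n = round(scaled)                        # Python's round() breaks ties to even
    elif mode == "stochastic":
        rng = rng if rng is not None else random.Random()
        lo = math.floor(scaled)
        # Round up with probability equal to the discarded fraction.
        n = lo + (1 if rng.random() < scaled - lo else 0)
    else:
        raise ValueError(f"unknown rounding mode: {mode!r}")
    if n == (2 << MAN_BITS):                     # rounding carried into the next binade
        n, e = 1 << MAN_BITS, e + 1
    if n < (1 << MAN_BITS):                      # denormal result: flush to zero
        return 0
    exp_field = e + BIAS
    if exp_field >= EXP_ALL_ONES:                # past the largest finite value
        return UHP_INF
    return (exp_field << MAN_BITS) | (n - (1 << MAN_BITS))
```

Because stochastic rounding rounds up with probability equal to the discarded fraction, the expected value of the result equals the input anywhere in the normal range. For example, 1 + 2⁻¹² lies a quarter ulp above 1.0, so it rounds up to 1 + 2⁻¹⁰ about one time in four. Round-to-nearest would always return 1.0 here, and small gradient updates of this size would be lost. A hardware implementation would need its own source of random bits in place of `random.Random`.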