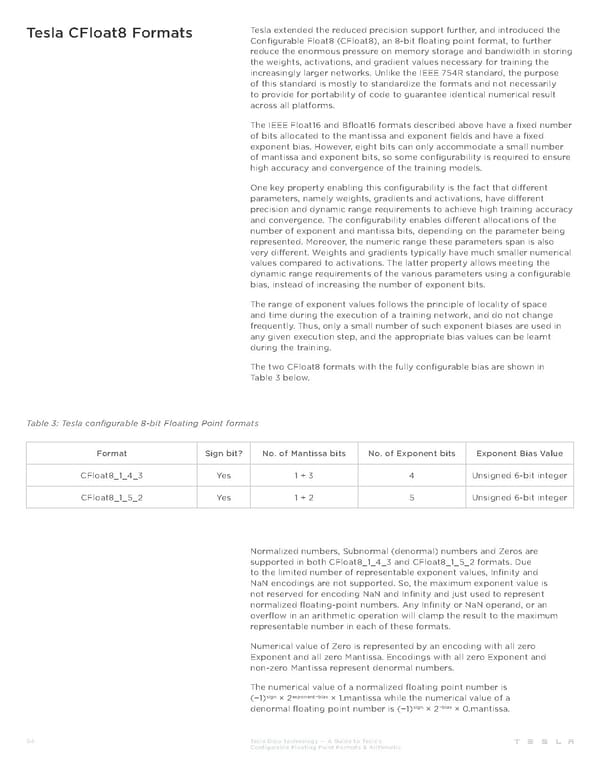

Tesla CFloat8 Formats

Tesla extended the reduced-precision support further and introduced the Configurable Float8 (CFloat8), an 8-bit floating point format, to further reduce the enormous pressure on memory storage and bandwidth in storing the weights, activations, and gradient values necessary for training increasingly large networks. Unlike the IEEE 754R standard, the purpose of this standard is mainly to standardize the formats, not necessarily to make code portable with identical numerical results across all platforms.

The IEEE Float16 and Bfloat16 formats described above have a fixed number of bits allocated to the mantissa and exponent fields and have a fixed exponent bias. However, eight bits can only accommodate a small number of mantissa and exponent bits, so some configurability is required to ensure high accuracy and convergence of the training models.

One key property enabling this configurability is that different parameters, namely weights, gradients, and activations, have different precision and dynamic range requirements to achieve high training accuracy and convergence. The configurability enables different allocations of the number of exponent and mantissa bits, depending on the parameter being represented. Moreover, the numeric ranges these parameters span are also very different: weights and gradients typically have much smaller numerical values than activations. This second property makes it possible to meet the dynamic range requirements of the various parameters using a configurable bias, instead of increasing the number of exponent bits.

The range of exponent values follows the principle of locality of space and time during the execution of a training network and does not change frequently. Thus, only a small number of such exponent biases are used in any given execution step, and the appropriate bias values can be learnt during training.
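As a rough illustration of why a configurable bias can stand in for extra exponent bits, the sketch below assumes the usual biased-exponent convention, in which a normalized value is scaled by 2^(e - bias) for a stored exponent field e, with field 0 set aside for zero and denormals and no field reserved for Infinity or NaN. The function name `exponent_window` and the bias values tried are illustrative assumptions, not part of the Tesla guide.

```python
from typing import Tuple


def exponent_window(exp_bits: int, bias: int) -> Tuple[int, int]:
    """Return (lowest, highest) power-of-two scale for normalized values.

    Assumption: scale = 2 ** (e - bias), where the stored field e runs from
    1 (field 0 is zero/denormal) up to 2**exp_bits - 1 (nothing reserved).
    """
    max_field = (1 << exp_bits) - 1
    return (1 - bias, max_field - bias)


# The window keeps the same width for every bias; only its position moves.
for bias in (0, 7, 31, 63):
    lo, hi = exponent_window(4, bias)
    print(f"bias={bias:2d}: normalized scales from 2^{lo} to 2^{hi}")
```

Under these assumptions a 4-bit exponent field always spans 15 consecutive powers of two. A larger bias slides that span toward the small magnitudes typical of weights and gradients, and a smaller bias slides it toward the larger magnitudes typical of activations.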
The two CFloat8 formats with the fully configurable bias are shown in Table 3 below.

Table 3: Tesla configurable 8-bit Floating Point formats

| Format | Sign bit? | No. of Mantissa bits | No. of Exponent bits | Exponent Bias Value |
| --- | --- | --- | --- | --- |
| CFloat8_1_4_3 | Yes | 1 + 3 | 4 | Unsigned 6-bit integer |
| CFloat8_1_5_2 | Yes | 1 + 2 | 5 | Unsigned 6-bit integer |

Normalized numbers, subnormal (denormal) numbers, and zeros are supported in both the CFloat8_1_4_3 and CFloat8_1_5_2 formats. Due to the limited number of representable exponent values, Infinity and NaN encodings are not supported. The maximum exponent value is therefore not reserved for encoding NaN and Infinity and is simply used to represent normalized floating-point numbers. Any Infinity or NaN operand, or an overflow in an arithmetic operation, clamps the result to the maximum representable number in each of these formats.

The numerical value zero is represented by an encoding with an all-zero exponent and an all-zero mantissa. Encodings with an all-zero exponent and a non-zero mantissa represent denormal numbers. The numerical value of a normalized floating point number is

$$(-1)^{\text{sign}} \times 2^{\text{exponent} - \text{bias}} \times 1.\text{mantissa}$$

while the numerical value of a denormal floating point number is

$$(-1)^{\text{sign}} \times 2^{-\text{bias}} \times 0.\text{mantissa}$$

Tesla Dojo Technology — A Guide to Tesla’s Configurable Floating Point Formats & Arithmetic
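The encoding rules in this section can be sketched as a small decoder. This is a minimal illustration, not Tesla's implementation: it assumes the bit layout is sign in the most significant bit, then the exponent field, then the mantissa field (the text does not state the layout), and the names `decode_cfloat8` and `saturate` are hypothetical. The denormal scale follows the formula as stated in this section. How the sign is treated when a NaN is clamped is also an assumption.

```python
import math


def decode_cfloat8(byte: int, exp_bits: int, bias: int) -> float:
    """Decode one CFloat8 byte using the value formulas stated in the text.

    Assumed layout (not given in the text): [sign | exponent | mantissa].
    exp_bits is 4 for CFloat8_1_4_3 and 5 for CFloat8_1_5_2.
    """
    if not 0 <= byte <= 0xFF:
        raise ValueError("byte must be in 0..255")
    if exp_bits not in (4, 5):
        raise ValueError("exp_bits must be 4 or 5")
    if not 0 <= bias <= 63:
        raise ValueError("bias is an unsigned 6-bit integer")

    man_bits = 7 - exp_bits
    sign = (byte >> 7) & 1
    exponent = (byte >> man_bits) & ((1 << exp_bits) - 1)
    mantissa = byte & ((1 << man_bits) - 1)
    fraction = mantissa / (1 << man_bits)  # the digits after "0." or "1."

    if exponent == 0:
        # Zero (mantissa == 0) or denormal: 2^(-bias) * 0.mantissa, as stated
        # in the text (IEEE 754 subnormals would use 2^(1 - bias) instead).
        magnitude = 2.0 ** (-bias) * fraction
    else:
        # Normalized, including the all-ones exponent (no Infinity/NaN codes).
        magnitude = 2.0 ** (exponent - bias) * (1.0 + fraction)
    return -magnitude if sign else magnitude


def saturate(x: float, exp_bits: int, bias: int) -> float:
    """Clamp Infinity, NaN, and out-of-range values to the largest magnitude.

    This only clamps; it does not round x to a representable CFloat8 value.
    """
    limit = decode_cfloat8(0x7F, exp_bits, bias)
    if math.isnan(x):
        return limit  # assumption: the text does not specify a sign for NaN
    if abs(x) > limit:
        return math.copysign(limit, x)  # assumption: the sign is preserved
    return x


print(decode_cfloat8(0x7F, 4, 7))        # 480.0, largest CFloat8_1_4_3 value at bias 7
print(decode_cfloat8(0x01, 4, 7))        # 0.0009765625, smallest denormal at bias 7
print(saturate(float("inf"), 4, 7))      # 480.0
```

Because the all-ones exponent is an ordinary normalized exponent here, the byte `0x7F` decodes to the largest finite value rather than to NaN, which is what makes saturation the natural overflow behavior.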
